(First posted in April 2019)

Surveys deliver valid and useful information if they are done well and for the right reasons.

Specifically, surveys are a good instrument for predicting near-term consumer preferences, although the accuracy degrades as the forecast horizon expands.

Every once in a while, you’ll see someone in avant-garde marketing circles bashing surveys with anti-vaxxer enthusiasm. For example, Philip Graves, "a consumer behaviour consultant, author and speaker," takes a dim view of market research surveys in his 2013 book Consumerology. Graves writes that "attempts to use market research as a forecasting tool are notoriously unreliable, and yet the practice continues."

He then cites political polling as an example of an unreliable forecasting tool, and does not elaborate beyond this one paragraph:

Opinion polls give politicians and the media plenty of ammunition for debate, but nothing they would attach any importance to if they considered their hopeless inaccuracy when compared with the real data of election results (and that’s after the polls have influenced the outcome of the results they’re seeking to forecast). (Consumerology, p. 178)

If anything, electoral polling proves that asking people about their preferences is a reliable and reasonably accurate indicator of their actual behavior. In election polling, there's nowhere to hide. The data and the forecasts are out there, and so, eventually, are the actual results. So every two years, and especially every four, we all get a rare chance to evaluate how good surveys are at forecasting people's future decisions.

Horse-race polls ask exactly the kind of question that people, according to critics, should not be able to answer accurately. Here's how these questions usually look:

If the presidential election were being held TODAY, would you vote for

- the Republican ticket of Mitt Romney and Paul Ryan

- the Democratic ticket of Barack Obama and Joe Biden

- the Libertarian Party ticket headed by Gary Johnson

- the Green Party ticket headed by Jill Stein

- other candidate

- don’t know

- refused

(Source: Pew Research's 2012 questionnaire PDF, methodology page)

Here's the track record of polls in US presidential elections from 1968 to 2012. FiveThirtyEight explains: "On average, the polls have been off by 2 percentage points, whether because the race moved in the final days or because the polls were simply wrong."

Source: FiveThirtyEight, November 2016

On average, you can expect 81% of all polls to pick the winner correctly.

Source: FiveThirtyEight, June 2016

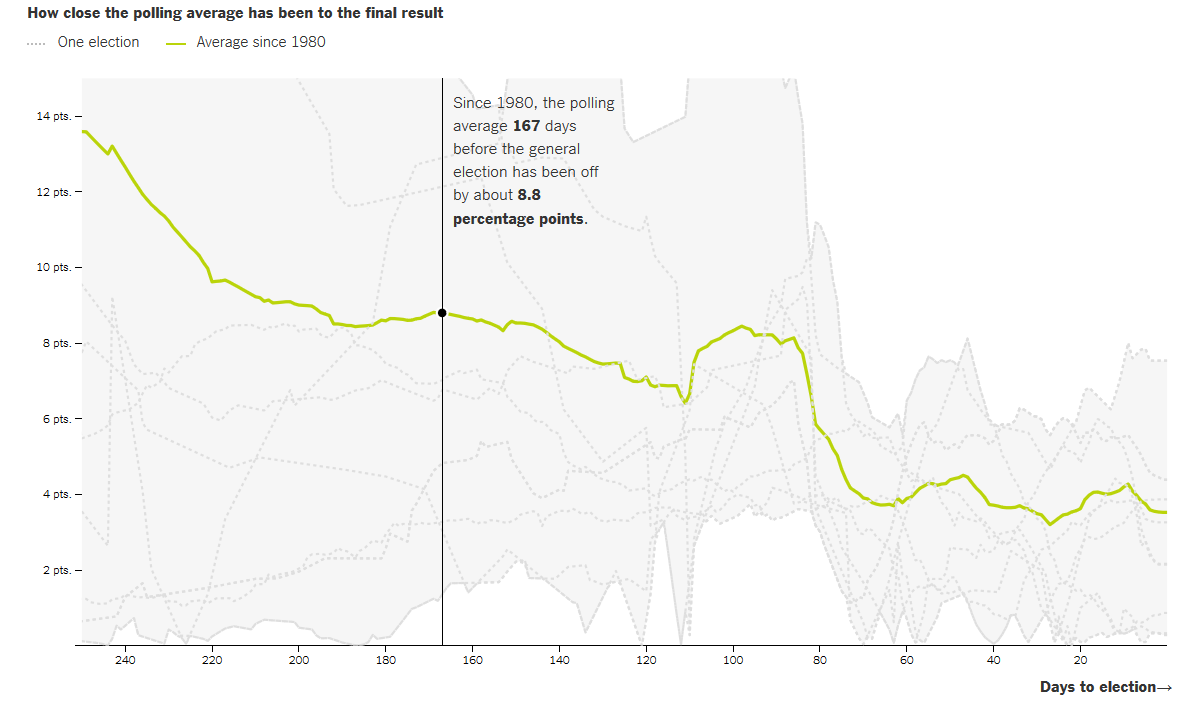

The closer to election day a poll is conducted, the more accurate it tends to be.

"The chart shows how much the polling average at each point of the election cycle has differed from the final result. Each gray line represents a presidential election since 1980. The bright green line represents the average difference." (NYTimes, June 2016)

What about the 2016 polls? The final national polls were not far from the actual vote shares.

"Given the sample sizes and underlying margins of error in these polls, most of these polls were not that far from the actual result. In only two cases was any bias in the poll statistically significant. The Los Angeles Times/USC poll, which had Trump with a national lead throughout the campaign, and the NBC News/Survey Monkey poll, which overestimated Clinton’s share of the vote." (The Washington Post, December 2016)
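To make "margins of error" and "statistically significant" concrete, here is a minimal sketch of the textbook calculation. It assumes a simple random sample and the normal approximation for a proportion; real polls are weighted and have design effects, so their effective margins are usually wider, and The Washington Post's exact method may differ. The function names and the poll numbers below are hypothetical, chosen only for illustration.

```python
import math


def margin_of_error(p: float, n: int, z: float = 1.96) -> float:
    """Margin of error for a proportion p estimated from a simple random sample of n.

    Assumes the normal approximation; z = 1.96 corresponds to ~95% confidence.
    Weighting in real polls typically makes the effective margin larger.
    """
    return z * math.sqrt(p * (1 - p) / n)


def gap_exceeds_margin(poll_share: float, actual_share: float, n: int, z: float = 1.96) -> bool:
    """Hypothetical helper: True if the poll missed the actual result by more than its margin."""
    return abs(poll_share - actual_share) > margin_of_error(poll_share, n, z)


# Hypothetical poll: 1,000 respondents, candidate at 46%, actual vote share 48%.
moe = margin_of_error(0.46, 1000)
print(f"Margin of error: ±{moe * 100:.1f} points")
print(gap_exceeds_margin(0.46, 0.48, 1000))
```

Under these assumptions the margin is about ±3.1 points, so a 2-point miss on a 1,000-person poll falls inside it: the poll is "off," but its bias is not statistically significant.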

So why then was Trump's win such a surprise for everyone?

"There is a fast-building meme that Donald Trump’s surprising win on Tuesday reflected a failure of the polls. This is wrong. The story of 2016 is not one of poll failure. It is a story of interpretive failure and a media environment that made it almost taboo to even suggest that Donald Trump had a real chance to win the election." (RealClearPolitics, November 2016)

In an experiment conducted by The Upshot, four teams of analysts looked at the same polling data from Florida.

"The pollsters made different decisions in adjusting the sample and identifying likely voters. The result was four different electorates, and four different results." In other words, the problem lay in interpreting the data, not in the data itself.

Source: The Upshot

(Here's a primer on how pollsters select likely voters.)

Nate Silver's list of what went wrong:

- a pervasive groupthink among media elites

- an unhealthy obsession with the insider’s view of politics

- a lack of analytical rigor

- a failure to appreciate uncertainty

- a sluggishness to self-correct when new evidence contradicts pre-existing beliefs

- a narrow viewpoint that lacks perspective from the longer arc of American history

In other words, when surveys don't work, “you must be holding it wrong”.